Each minute of our life is a lesson, but most of us fail to read it. I thought I would share my daily lessons, along with the lessons I have learned from watching the people around me, so they may be useful for you and serve as memories for me.

Over the last several years working in healthcare technology, I’ve had a front-row seat to the industry’s growing relationship with artificial intelligence. From primary care analytics and NHS interoperability work to leading AI-driven product development, I’ve watched organisations move from curiosity to urgency. Today, everyone—from commissioners to clinicians to software vendors—is trying to figure out how to incorporate AI into their workflows, transform patient experience, or simply avoid falling behind.

But the truth is more complicated: healthcare is adopting AI faster in ambition than in capability. I’ve seen teams want “an AI solution” before fully understanding the problem. I’ve watched pilots stall because the workflow wasn’t ready, governance wasn’t aligned, or the data simply couldn’t support the outcome. I’ve also seen the opposite—cases where well-structured frameworks led to safe, meaningful, and measurable improvements in clinical care and operational efficiency.

AI is not ruling healthcare; it’s reshaping the foundations we build on. And in this rush, the organisations that succeed aren’t the ones using the most sophisticated models, but the ones using the most disciplined frameworks.

That’s why I consistently return to a core set of AI frameworks. They anchor the excitement, provide guardrails for safety and governance, and force clarity on what we are actually trying to achieve. In my own work—whether integrating SmartLife products with NHS systems through IM1, shaping data-driven decision tools, or preparing analytics platforms for AI-powered features—these frameworks have proven invaluable.

Healthcare loves acronyms almost as much as it needs transformation. Every week, someone asks me the same question: “Where do we even start with AI in our organisation?”

It’s the right question—but often the wrong mindset. Many leaders assume AI adoption is a single decision, when in reality it’s a layered change-management journey involving clinical safety, governance, workflow redesign, regulatory alignment, analytics infrastructure, and—most underestimated of all—human behaviour.

Working across primary care data platforms, NHS integrations (IM1, PFS, Bulk Extract), analytics products, and clinical pathways, I’ve learned that choosing the right framework is less about picking the trendiest one and more about clarifying what problem you’re actually solving. Are you validating an early prototype? Deploying an AI decision-support tool? Creating an enterprise AI strategy? Evaluating risk? Measuring real-world impact?

Different problems require different lenses.

Below is a curated, experience-tested set of frameworks I use when guiding organisations through AI adoption—from ideation to implementation to evaluation. I’ve added commentary on how each aligns with real operational constraints we face in the NHS and primary care.

This article outlines 12 AI frameworks I rely on and why they matter. Whether you’re evaluating your first model or planning a system-wide adoption strategy, these will help you cut through the hype and focus on what works.

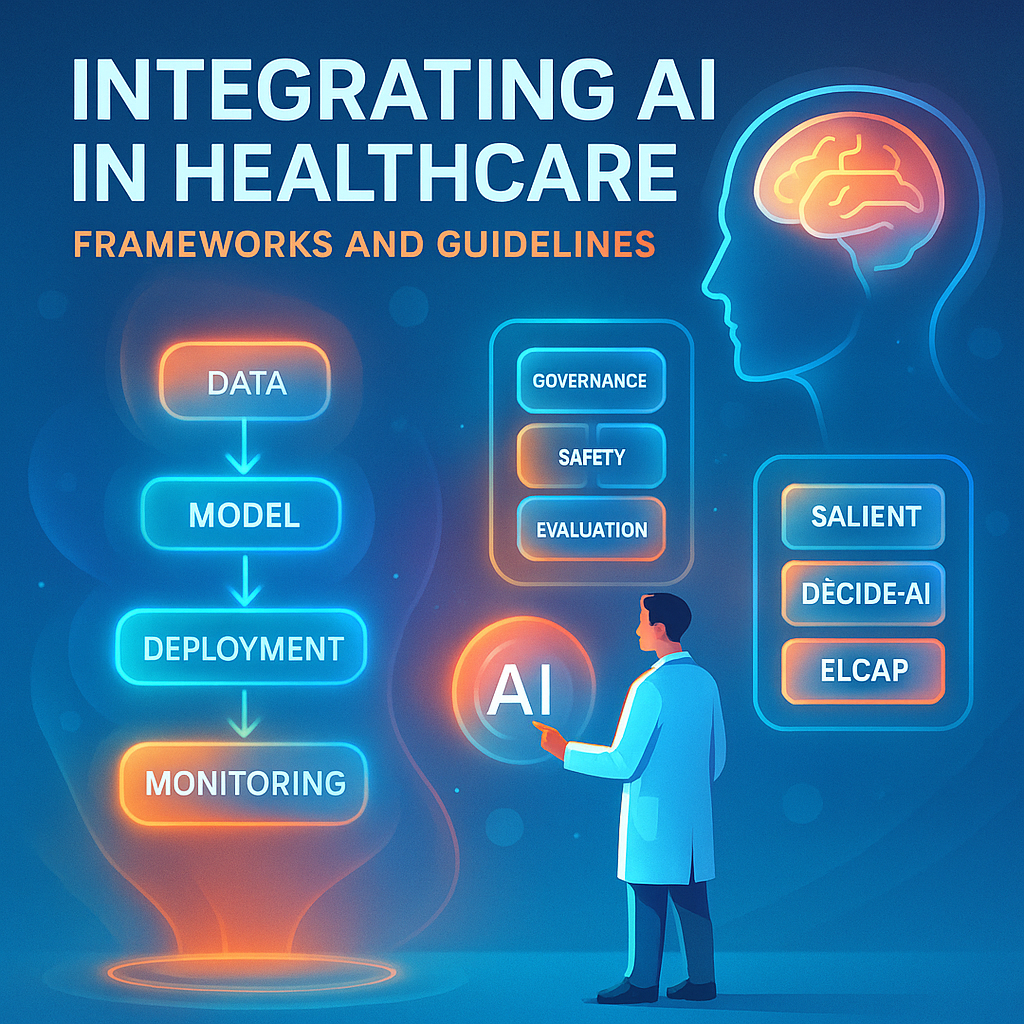

1. ELCAP: ESMO Guidance for Large Language Models in Clinical Practice

A pragmatic guide for clinically safe use of LLMs.

Where it helps in practice:

Use this as your clinical acceptance criteria when piloting GPT-style tools for triage, documentation, coding support, or patient communication. It’s strong at articulating risks clinicians care about (hallucination, ambiguity, trust).

2. QUEST: Human Evaluation Framework for LLMs in Healthcare

Focuses on human–AI interaction quality.

Why it matters operationally:

Most AI failures aren’t algorithmic—they’re behavioural. QUEST is excellent for assessing whether staff can meaningfully use your tool and whether it improves decision clarity rather than adding cognitive load.

3. FUTURE-AI: Best Practices for Trustworthy Medical AI

A gold-standard reference for governance, fairness, transparency, and safety.

My take as a product lead:

This is where your SCAL, DPIA, clinical safety case, and hazard logs belong. It gives your governance team a shared vocabulary and stops “trust” from becoming a buzzword.

4. TEHAI: Evaluation Framework for Implementing AI in Healthcare Settings

A structured evaluation framework for assessing readiness and impact.

In the real world:

TEHAI helps you pressure-test whether your AI system can survive the organisational ecosystem—data flows, workflow integration, interoperability, clinical oversight, commissioning requirements, etc.

Good to use before an ICB deployment or scaling beyond a pilot practice.

5. DECIDE-AI: Guideline for Early Clinical Evaluation of AI Decision Support Systems

Designed for early-stage testing and feasibility assessments.

When I use it:

During prototype and pilot phases—especially when validating AI-driven recommendations, personalised care plans, risk scores, or decision trees before exposing clinicians to them.

6. SALIENT: Framework for End-to-End Clinical AI Implementation

One of the few frameworks that covers real-world implementation end-to-end.

Why it’s underrated:

It doesn’t just say “implement AI”—it shows the sequence:

data → model → workflow → evaluation → monitoring → governance.

It’s closest to how I run AI integration projects in practice.

7. AI Evidence Pathway for Trustworthy AI in Health

A more policy-oriented framework championed for national-scale deployments.

Where it fits:

Essential for organisations engaging with NHS England, regulators, ICBs, or multi-site implementations. Strong alignment with UK assurance processes.

8. FURM: Evaluating Fair, Useful, and Reliable AI Models in Health Systems

A deceptively simple but powerful framing.

Why leaders should care:

Most organisations over-index on accuracy, under-index on fairness, and ignore usefulness entirely. FURM centres actual clinical and operational utility.

9. IMPACTS Framework: Evaluating Long-Term Real-World AI Effects

Focuses on post-deployment—where most frameworks end prematurely.

Critical insight:

The goal isn’t to launch AI. The goal is to sustain value and avoid regressions. IMPACTS helps measure whether your deployed solution meaningfully changes outcomes, costs, or experience.

10. Process Framework for Successful AI Adoption in Healthcare

A meta-framework showing the organisational capabilities required:

stakeholder alignment, workflow fit, policy readiness, training, trust-building.

My take:

This is the antidote to “AI as an IT project.” AI success is organisational, not technical.

11. The “3L Model”: Latency, Liability, and Literacy

A brutally practical test before deploying any AI tool in a real clinical environment.

Ask:

- Latency – Is the AI fast enough to fit the workflow? Slow = unusable.

- Liability – Who is accountable for the decision? If the answer is unclear, the deployment is premature.

- Literacy – Do staff understand what the AI can’t do? Most clinicians don’t need to know how AI works—but they must know where its limits lie.

This model has saved several projects I’ve worked on from misaligned expectations.
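One way to make the 3L test concrete is a small pre-deployment checklist. The sketch below is an illustration, not part of the model itself: the field names, the use of a 95th-percentile latency, and the idea of a fixed latency budget agreed with clinicians are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


def p95_latency(latencies_ms: List[float]) -> float:
    """Nearest-rank 95th percentile of observed response times."""
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n), avoids float rounding
    return ordered[rank - 1]


@dataclass
class ThreeLCheck:
    latencies_ms: List[float]         # hypothetical: response times logged during a pilot
    latency_budget_ms: float          # hypothetical: delay the clinical workflow can absorb
    accountable_owner: Optional[str]  # named role accountable for AI-assisted decisions
    staff_briefed_on_limits: bool     # have users been shown what the tool can't do?

    def failures(self) -> List[str]:
        """Return the names of the 3L tests that fail; an empty list means proceed."""
        failed = []
        # Latency: no evidence at all, or too slow at the tail, both count as a fail.
        if not self.latencies_ms or p95_latency(self.latencies_ms) > self.latency_budget_ms:
            failed.append("latency")
        # Liability: unclear accountability means the deployment is premature.
        if not self.accountable_owner:
            failed.append("liability")
        # Literacy: users must know where the tool's limits lie.
        if not self.staff_briefed_on_limits:
            failed.append("literacy")
        return failed


check = ThreeLCheck(
    latencies_ms=[800, 950, 1200, 4000, 900],
    latency_budget_ms=2000,
    accountable_owner=None,
    staff_briefed_on_limits=True,
)
print(check.failures())  # ['latency', 'liability']
```

The tail percentile is used instead of the mean on the assumption that a tool which is usually quick but occasionally stalls still disrupts a consultation.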

12. The “Integration Trifecta”: Data → Workflow → Governance

Most failed AI implementations break on one of these:

- Data: Is the data clean, real-time, coded, and interoperable?

- Workflow: Does it reduce clicks, not add them?

- Governance: Are safety, oversight, and auditability built in from day one?

In our IM1 and SCAL work, this trifecta is the backbone:

data extraction and mapping, API integration, safety case review, hazard logging, and clinical sign-off all flow through these gates.
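The trifecta can also be expressed as ordered gates that a deployment must clear one after another. This is a minimal sketch under stated assumptions: every project key and check below is hypothetical, chosen only to mirror the Data, Workflow, and Governance questions above, and is not taken from IM1, SCAL, or any NHS specification.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical facts about one deployment; every key here is illustrative.
Project = Dict[str, Any]
Check = Tuple[str, Callable[[Project], bool]]

# Gates are evaluated in order: Data -> Workflow -> Governance.
GATES: List[Tuple[str, List[Check]]] = [
    ("data", [
        ("clean", lambda p: p["data_quality_issues"] == 0),
        ("real_time", lambda p: bool(p["data_real_time"])),
        ("coded", lambda p: bool(p["data_coded"])),
        ("interoperable", lambda p: bool(p["data_interoperable"])),
    ]),
    ("workflow", [
        # The tool should reduce clicks, not add them.
        ("reduces_clicks", lambda p: p["clicks_with_ai"] < p["clicks_without_ai"]),
    ]),
    ("governance", [
        ("safety_case_signed_off", lambda p: bool(p["safety_case_signed_off"])),
        ("hazard_log_maintained", lambda p: bool(p["hazard_log_maintained"])),
        ("audit_trail_enabled", lambda p: bool(p["audit_trail_enabled"])),
    ]),
]


def first_failing_gate(project: Project) -> Optional[Tuple[str, List[str]]]:
    """Return the first gate with failed checks (and their names), or None if all pass."""
    for gate, checks in GATES:
        failed = [name for name, check in checks if not check(project)]
        if failed:
            return gate, failed
    return None


pilot = {
    "data_quality_issues": 0,
    "data_real_time": True,
    "data_coded": True,
    "data_interoperable": True,
    "clicks_with_ai": 7,
    "clicks_without_ai": 5,
    "safety_case_signed_off": False,
    "hazard_log_maintained": True,
    "audit_trail_enabled": True,
}
print(first_failing_gate(pilot))  # ('workflow', ['reduces_clicks'])
```

Stopping at the first failing gate reflects the idea that work flows through these gates in sequence; a team that prefers a full readiness report could collect failures from every gate instead.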

What I’ve Learned Building AI in Healthcare

Leading AI initiatives in primary care analytics and NHS interoperability has taught me a few uncomfortable truths:

- Most organisations want AI but haven’t articulated the problem it should solve.

- Governance teams want safety; clinicians want usefulness; leadership wants ROI. AI rarely satisfies all three at once without deliberate design.

- You cannot “bolt” AI onto a broken workflow; AI amplifies workflow issues.

- The gap between pilot success and system-wide adoption is bigger than most leaders predict.

- Evaluation frameworks don’t replace judgement—they sharpen it.

Frameworks don’t give you answers; they give you structure. The thinking still has to be yours.

Which Framework Should You Start With?

Here’s a simple test:

- Building a prototype? → DECIDE-AI + QUEST

- Preparing for a pilot? → SALIENT + TEHAI

- Aiming for clinical deployment? → ELCAP + FUTURE-AI

- Scaling across an ICB or enterprise? → AI Evidence Pathway + IMPACTS

- Ensuring fairness and trust? → FURM

- Want an operational sanity check? → Integration Trifecta + 3L Model

If you want to share your experiences, you can find me online in all your favourite places: LinkedIn and Facebook. Shoot me a DM, a tweet, a comment, or whatever works best for you. I’ll be the one trying to figure out how to read books and get better at playing ping pong at the same time.